Monday, January 16, 2023

New Arrival 9.

Monday, January 9, 2023

AS/400 and me

EPROM burning 21/25V 3. - Closing

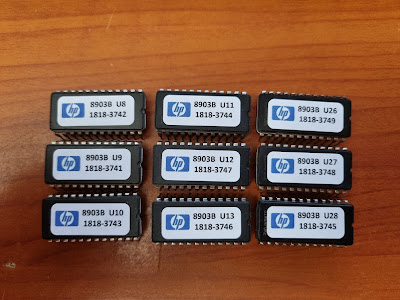

- The TL866II cannot handle any device that needs a programming voltage above 18V

- My HP 8903B has 21V EPROMs and I wanted to refresh them

- T56 programmers cost far more than I would pay for this

Tuesday, January 3, 2023

Azure, AKS, Terraform, proxy

Happy New Year to everybody!

It may be unexpected, but I am starting the year with some professional content rather than my hobbies.

When you deploy an Azure Kubernetes Service (AKS) cluster from Terraform and the cluster has a proxy configuration, every terraform apply will redeploy the cluster, which is not what you expect.

The reason is that Azure adds a few addresses to the no_proxy list in addition to the ones you set, and any change to the no_proxy list forces recreation. The list stored in the state will always be different from the list on the AKS resource itself.

First, let's check what those addresses are:

- localhost and 127.0.0.1 - these need no explanation, they are the VM loopback name and address

- konnectivity - Kubernetes Konnectivity service

- 168.63.129.16 - Azure platform resources: https://learn.microsoft.com/en-us/azure/virtual-network/what-is-ip-address-168-63-129-16

- 169.254.169.254 - Azure Instance Metadata Service: https://learn.microsoft.com/en-us/azure/virtual-machines/linux/instance-metadata-service

- Address space of the VNET where the cluster is installed

- pod_cidr of the AKS cluster. Default: 10.244.0.0/16

- service_cidr of the AKS cluster. Default: 10.0.0.0/16

- If it is a private cluster, the cluster's private_fqdn. It looks like this: <clustername>-<8 hex random number>.<DNS Zone Guid>.privatelink.<region>.azmk8s.io

All of the values above can be known before the cluster is created, except the last one. The private_fqdn is generated during the deployment of the cluster, so if you have a private cluster, adding all of the items to your no_proxy list beforehand will not help.

What you can do:

Add a lifecycle block to the cluster resource so that Terraform ignores changes to the no_proxy list. It will look like this:
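A minimal sketch of such a lifecycle block, assuming the azurerm provider's azurerm_kubernetes_cluster resource with an http_proxy_config block. The resource name aks, the proxy URLs and the no_proxy entry are placeholders, and the other required cluster arguments are left out:

```hcl
resource "azurerm_kubernetes_cluster" "aks" {
  # name, location, resource_group_name, dns_prefix, default_node_pool,
  # identity and the other cluster arguments are omitted in this sketch.

  http_proxy_config {
    http_proxy  = "http://proxy.example.internal:3128/" # placeholder value
    https_proxy = "http://proxy.example.internal:3128/" # placeholder value
    no_proxy    = ["example.internal"]                  # placeholder value
  }

  lifecycle {
    # Azure appends its own entries to no_proxy after creation, so the
    # difference between configuration and reality is ignored here.
    ignore_changes = [
      http_proxy_config[0].no_proxy,
    ]
  }
}
```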

This works, but it is only half of the solution.

When you reapply your plan, it will not recreate the AKS cluster. But what if you deliberately change the no_proxy parameters? In that case the change is still ignored and the AKS cluster is not recreated, which is not the expected behavior.

Let's assume you have the user-provided no_proxy parameters in a no_proxy variable:
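Such a variable could be declared roughly like this. The description and the empty default are my assumptions, only the name no_proxy comes from the text:

```hcl
variable "no_proxy" {
  description = "User-provided hosts, domains and CIDRs that must bypass the proxy"
  type        = list(string)
  default     = [] # assumption: no extra entries unless the caller sets them
}
```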

The lifecycle block can trigger recreation of the resource with the replace_triggered_by argument. The problem is that a variable, like the one above, can't be the source of the trigger. A resource, however, can.

Here comes a dirty trick. How do you convert a variable list into a resource?

HashiCorp has a Terraform provider named tfcoremock (https://registry.terraform.io/providers/hashicorp/tfcoremock/latest/docs). I'll use it here.

First add it to the providers list:
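A sketch of the provider declaration, assuming the registry source address hashicorp/tfcoremock from the link above. No version is pinned here, and the empty provider block relies on the provider's defaults:

```hcl
terraform {
  required_providers {
    # Add this entry next to the providers you already use (azurerm, ...).
    tfcoremock = {
      source = "hashicorp/tfcoremock"
    }
  }
}

# No provider settings are set in this sketch.
provider "tfcoremock" {}
```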

Now we can store the list above in the state:
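One way this could look, assuming the provider's tfcoremock_complex_resource, whose list attribute (as I understand its documented schema) takes objects with a string field. The resource name no_proxy and the id value are my own choices:

```hcl
resource "tfcoremock_complex_resource" "no_proxy" {
  id = "no_proxy" # arbitrary identifier, chosen for this sketch

  # Wrap every entry of the variable in an object, because the list
  # attribute holds objects rather than plain strings.
  list = [for entry in var.no_proxy : { string = entry }]
}
```

The resource creates nothing in Azure. It only exists so that the content of the variable ends up in the Terraform state as a resource attribute.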

Now we can reference it from replace_triggered_by:
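A sketch of the reference, assuming the list is stored in a tfcoremock_complex_resource named no_proxy (a hypothetical name) and that Terraform 1.2 or later is in use, since replace_triggered_by is not available before that. The cluster arguments are omitted again:

```hcl
resource "azurerm_kubernetes_cluster" "aks" {
  # cluster arguments and http_proxy_config omitted in this sketch

  lifecycle {
    # Keep ignoring the entries that Azure adds on its own ...
    ignore_changes = [
      http_proxy_config[0].no_proxy,
    ]

    # ... but replace the cluster when the stored copy of the list changes.
    replace_triggered_by = [
      tfcoremock_complex_resource.no_proxy.list,
    ]
  }
}
```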

This is almost perfect, but not completely.

When you change any element in the no_proxy variable list, it will trigger the replacement, but if you add an element to the list or remove one from it, the change is ignored.

One last step: put the length of the list to work as well:

Now it replaces the AKS cluster on any change in the no_proxy variable list, but keeps it intact otherwise.
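A sketch of how the length could be tracked, assuming the same hypothetical tfcoremock_complex_resource named no_proxy and its integer attribute. Storing the length there and listing it as a second trigger is my reading of this step, not necessarily the exact original code:

```hcl
resource "tfcoremock_complex_resource" "no_proxy" {
  id = "no_proxy" # arbitrary identifier, chosen for this sketch

  # The number of entries, so that additions and removals are visible.
  integer = length(var.no_proxy)

  # The entries themselves, one object per element.
  list = [for entry in var.no_proxy : { string = entry }]
}

resource "azurerm_kubernetes_cluster" "aks" {
  # cluster arguments and http_proxy_config omitted in this sketch

  lifecycle {
    ignore_changes = [
      http_proxy_config[0].no_proxy,
    ]

    # Replace the cluster when either the content or the length changes.
    replace_triggered_by = [
      tfcoremock_complex_resource.no_proxy.list,
      tfcoremock_complex_resource.no_proxy.integer,
    ]
  }
}
```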